Computer processors have improved dramatically, with core counts and transistor counts rising nearly every year. As transistor density has increased, transistors have shrunk so far that Moore’s Law is now slowing down. When we talk about processors, we usually discuss core counts, transistor counts, and clock frequencies, but we rarely talk about cache memory, and manufacturers seldom advertise it. Yet processor performance depends heavily on cache memory.


So, What is CPU Cache Memory?

In simple terms, cache is the fastest memory in a computer after the CPU’s own registers. In the early days of computing, cache memory wasn’t needed: processors were slow, and although RAM was slow too, it was fast enough to keep up with them. From the 1980s onward, however, processor performance improved far faster than RAM performance, and making RAM fast enough to keep pace would have been very expensive for little benefit. That’s why a different type of memory was introduced: cache memory, built from SRAM (static RAM).

At first, SRAM was not inside the CPU; it came as separate chips on the motherboard. Its capacity was also much smaller than that of DRAM, the memory we commonly just call RAM. Cache memory is dramatically faster than RAM, roughly 10 to 100 times faster, and responds to CPU requests in just a few nanoseconds. Part of the reason for this low latency is that modern cache sits on the CPU die itself, so data only has to travel a few millimetres at most. Cache memory holds the data the CPU needs most often. Unlike DRAM, which stores each bit as charge in a tiny capacitor that leaks and must be periodically refreshed, SRAM stores each bit in a small circuit of transistors that holds its value as long as power is on, so cache does not need to be refreshed like RAM.
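When a cache fills up, it has to decide which data to keep. A common textbook policy is least recently used (LRU): keep recently used data, on the bet that it will be needed again soon, and evict whatever has gone unused the longest. The Python sketch below is a toy model under that assumption; the `LRUCache` class and its `read` method are hypothetical names, and real CPUs typically use hardware approximations of such policies, which this sketch does not attempt to model.

```python
from collections import OrderedDict


class LRUCache:
    """Toy cache holding at most `capacity` entries, evicting the least recently used."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.entries: OrderedDict[int, int] = OrderedDict()  # address -> value, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, address: int, backing_store: dict[int, int]) -> int:
        if address in self.entries:
            # Cache hit: mark this address as the most recently used.
            self.entries.move_to_end(address)
            self.hits += 1
            return self.entries[address]
        # Cache miss: fetch from the slower backing store (standing in for RAM).
        self.misses += 1
        value = backing_store[address]
        self.entries[address] = value
        if len(self.entries) > self.capacity:
            # Evict the entry that has gone unused the longest.
            self.entries.popitem(last=False)
        return value


# Usage: a two-entry cache in front of a tiny pretend "RAM".
ram = {addr: addr * 10 for addr in range(8)}
cache = LRUCache(capacity=2)
for addr in [0, 1, 0, 2, 1]:
    cache.read(addr, ram)
print(cache.hits, cache.misses)  # 1 4
```

Re-reading address 0 is a hit because it was used recently; address 1 is evicted when 2 arrives, so reading it again is a miss.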

Different Types of Memory in Our Computer

Our computers use several kinds of storage. Primary storage, also called main memory, is RAM: it is much faster than secondary storage, but it is short-term memory with far lower capacity, it loses its contents when the power is off, and the data in its DRAM cells must be constantly refreshed. Secondary storage, such as SSDs and hard drives, is where the operating system and programs are stored permanently.

How Does the CPU Cache Work?

Computer programs are made up of instructions that the CPU can understand and execute. As mentioned earlier, programs are stored on the SSD or hard drive, and whatever task you give your computer, the data flows from that storage through RAM toward the CPU.

The CPU, or processor, can execute billions of instructions per second, but to put that power to practical use, it needs memory that can keep up. RAM can’t deliver data to the CPU as quickly as it needs, but cache memory can.
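A quick back-of-the-envelope calculation shows why. At a clock speed of f GHz, one cycle lasts 1/f nanoseconds, so a memory access that takes t nanoseconds keeps the core waiting for roughly t × f cycles. The latencies below are assumed, round illustrative figures (about 1 ns for an L1 hit and 80 ns for a trip to RAM on a hypothetical 4 GHz CPU), not measurements of any particular processor.

```python
def latency_in_cycles(latency_ns: float, clock_ghz: float) -> float:
    """Convert a memory latency in nanoseconds into CPU clock cycles.

    One cycle at `clock_ghz` GHz lasts 1 / clock_ghz nanoseconds,
    so the number of cycles spent waiting is latency_ns * clock_ghz.
    """
    return latency_ns * clock_ghz


# Assumed, illustrative latencies for a hypothetical 4 GHz CPU.
CLOCK_GHZ = 4.0
ASSUMED_LATENCY_NS = {"L1 cache": 1.0, "RAM": 80.0}

for name, ns in ASSUMED_LATENCY_NS.items():
    print(f"{name}: {ns} ns = {latency_in_cycles(ns, CLOCK_GHZ):.0f} cycles")
```

Under these assumptions, an L1 hit costs about 4 cycles while every trip to RAM costs about 320, hundreds of cycles in which the core could otherwise have been executing instructions.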

A memory controller directs data between RAM and the cache. Depending on the CPU, the memory controller is either integrated into the CPU itself, as in most modern processors, or located in the northbridge chipset on the motherboard, as in older systems.

Let’s Understand the Memory Hierarchy

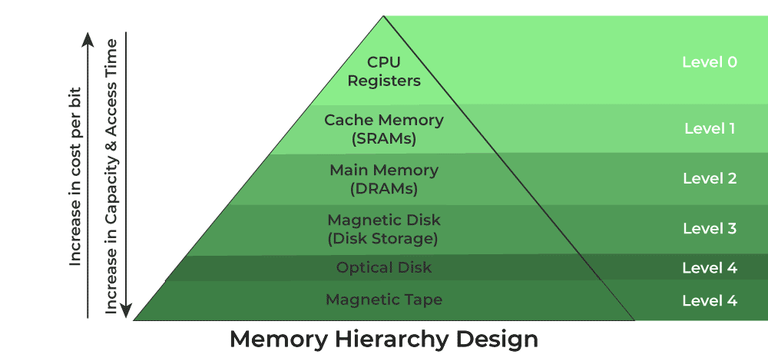

Here, I’d like to introduce a concept called the “memory hierarchy.” It classifies memory into five levels based on speed, response time, and capacity: registers, cache, main memory (RAM), magnetic disks, and magnetic tapes. We’ll leave magnetic tapes out here, since they are rarely used in everyday computers.

When the processor needs data, it works its way down this hierarchy step by step. If the data isn’t already in a register, it checks the cache memory. If it’s not there, it looks in RAM, and so on. In simple terms, this is how data flows within a computer. The further down this chain you go, the higher the capacity but the slower the speed.
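This step-by-step search can be modeled as a loop over the levels, from fastest to slowest, adding up the time spent at each level until the data turns up. It is a simplified sketch: the `MemoryLevel` class and `find` function are hypothetical, the latencies and the sets of addresses each level holds are made-up assumptions, and real hardware overlaps and shortcuts many of these steps.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryLevel:
    name: str
    latency_ns: float  # assumed time to check this level
    contents: set[int] = field(default_factory=set)  # addresses this level currently holds


def find(address: int, hierarchy: list[MemoryLevel]) -> tuple[str, float]:
    """Search the levels in order; return (level where found, total time spent)."""
    elapsed = 0.0
    for level in hierarchy:
        elapsed += level.latency_ns
        if address in level.contents:
            return level.name, elapsed
    raise KeyError(f"address {address} not found at any level")


# Hypothetical hierarchy with made-up latencies: each level is larger and slower.
hierarchy = [
    MemoryLevel("cache", 2.0, {1, 2}),
    MemoryLevel("RAM", 80.0, {1, 2, 3, 4}),
    MemoryLevel("SSD", 100_000.0, {1, 2, 3, 4, 5, 6}),
]

print(find(2, hierarchy))  # found in the cache after 2.0 ns
print(find(5, hierarchy))  # falls all the way through to the SSD
```

Data found near the top of the hierarchy is cheap to reach; data that has to come from the bottom pays for every level it passes through.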

Types Of Cache Memory

Cache memory is typically divided into three levels, L1, L2, and L3, based on speed and size. The hierarchy applies here as well: L1 is the fastest, followed by L2 and then L3, while in capacity the order is reversed, with L3 the largest, then L2, then L1. L1 is also the first cache the CPU checks: whenever it needs any data, it looks in the L1 cache first. Each core’s L1 cache is small, typically a few tens of kilobytes, so even summed across all cores a CPU usually has only a few hundred kilobytes to around 2 MB of L1 in total. CPUs with more cores have more L1 cache overall, since every core gets its own.

The L1 cache is split into two sections: the instruction cache and the data cache. The instruction cache holds the instructions the CPU needs to execute, while the data cache holds the data those instructions operate on.

For example, if there’s an instruction to add two numbers, the add instruction itself would be in the instruction cache, and the two numbers would be in the data cache.

L2 cache is slower than L1 but larger, typically from 256 KB to a couple of megabytes per core depending on the CPU, which can add up to as much as 32 MB across all cores. For example, the Ryzen 5 5600X has 384 KB of L1 cache and 3 MB of L2 cache, along with 32 MB of L3 cache. L3 cache is slower than L1 and L2 but still much faster than RAM. Its size varies widely, from a few megabytes on budget processors to tens or even hundreds of megabytes on high-end desktop and server chips.

As mentioned earlier, cache memory used to be a separate chip on the motherboard, back when CPUs had only a single core. Today it is built into the CPU, and the amount varies widely between models; some processors, like the Ryzen 7 5800X3D, carry as much as 96 MB of L3 cache.

In most modern desktop CPUs, the L1 and L2 caches are private to each core, while the L3 cache is shared among the cores (on some chips, among a group of cores). Any core can access the data it needs from the shared L3 cache.
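The private/shared split explains how the Ryzen 5 5600X totals add up. The sketch below assumes that chip’s per-core layout (6 Zen 3 cores, each with 32 KB of L1 instruction cache, 32 KB of L1 data cache, and 512 KB of L2) plus a single 32 MB L3 shared by all six cores; private caches are multiplied by the core count, the shared one is not.

```python
# Assumed per-core cache sizes for a Ryzen 5 5600X (6 Zen 3 cores).
CORES = 6
L1_INSTRUCTION_KB = 32  # private to each core
L1_DATA_KB = 32         # private to each core
L2_KB = 512             # private to each core
L3_SHARED_MB = 32       # one pool shared by all cores

# Private caches: every core has its own copy, so multiply by the core count.
total_l1_kb = CORES * (L1_INSTRUCTION_KB + L1_DATA_KB)
total_l2_mb = CORES * L2_KB / 1024

print(f"L1 total: {total_l1_kb} KB")
print(f"L2 total: {total_l2_mb:g} MB")
# Shared cache: a single pool, so it is not multiplied by the core count.
print(f"L3 total: {L3_SHARED_MB} MB")
```

Under these assumptions the script prints 384 KB of L1, 3 MB of L2, and 32 MB of L3, matching the figures quoted for that processor.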

How Much Cache Memory is Better?

When it comes to determining how much cache memory is necessary, it’s important to note that this isn’t a parameter you can directly control. While cache memory indeed plays a significant role in CPU performance, it’s not the only factor to consider. Instead, you should focus on assessing the overall performance of the processor.

Different CPUs come with varying amounts of cache memory as provided by the manufacturer, and you can’t adjust or modify this yourself. What matters most is how the CPU performs in real-world scenarios. It’s advisable to look at benchmarks, evaluate its performance in different games and software applications, and then make your choice based on your specific needs.

In essence, you don’t need to fixate on cache memory alone. Instead, consider the broader range of technologies and overall performance metrics. Technologies such as AMD’s Smart Access Memory (its implementation of Resizable BAR) and the Infinity Cache on its Radeon graphics cards can further improve performance, so keep an eye on these as well when making your decision.

Understanding Data Flow in Computers

Now, let’s look a little more closely at the data flow. When the processor needs data, it first checks the L1 cache. If the data is found there, that’s called a “cache hit”; if not, it’s a “cache miss,” and the CPU checks the L2 cache. If the data still isn’t found, it looks in the L3 cache. If the data isn’t in any of the caches, the CPU fetches it from RAM, and if it isn’t in RAM either, the operating system first has to load it from the SSD or hard drive. In other words, the search continues down the hierarchy until the required data is found.
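The cost of cache misses can be estimated with the standard average memory access time (AMAT) formula: a level’s hit time is always paid, and its miss rate decides how often the next, slower level must also be consulted, so AMAT = hit time + miss rate × (cost of the next level). The sketch below applies that rule from the last cache back to L1. The `average_access_time` function is a hypothetical helper, and the hit times and miss rates are assumed, illustrative values, not measurements of any real CPU.

```python
def average_access_time(levels: list[tuple[float, float]], memory_ns: float) -> float:
    """Average memory access time (AMAT) for a chain of caches in front of RAM.

    `levels` lists (hit_time_ns, miss_rate) pairs from L1 outward. Working from
    the last cache back to L1: cost = hit_time + miss_rate * (cost of next level).
    """
    cost = memory_ns  # cost of going past the last cache level to RAM
    for hit_time_ns, miss_rate in reversed(levels):
        cost = hit_time_ns + miss_rate * cost
    return cost


# Assumed, illustrative (hit time in ns, miss rate) for L1, L2, and L3; RAM at 80 ns.
caches = [(1.0, 0.10), (4.0, 0.50), (10.0, 0.50)]
print(average_access_time(caches, memory_ns=80.0))
print(average_access_time([], memory_ns=80.0))  # no caches: every access goes to RAM
```

With these assumed numbers, the average access takes about 3.9 ns, far closer to the L1 hit time than to the 80 ns trip to RAM, because most requests are served by the caches.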


In the Concluding Lines…

In conclusion, cache memory is a crucial component of modern processors, significantly affecting CPU performance by providing quick access to frequently used data. While cache size does matter, the amount of cache isn’t something a user can control. The choice of a processor should be based on a holistic assessment of its overall performance, taking into account benchmarks and real-world usage scenarios.
